PIVOTAL CLOUD FOUNDRY (PCF) PAAS PLATFORM INSTALLATION USING CONCOURSE ON AWS CLOUD

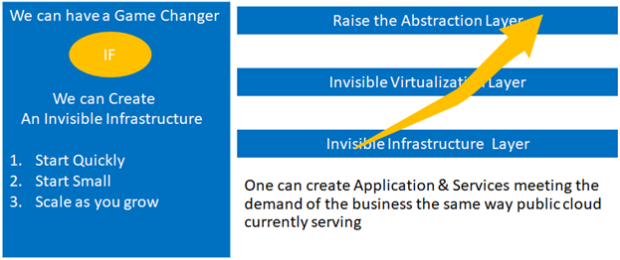

Pivotal Cloud Foundry (PCF) PaaS Platform is a simple and great platform from a developer as well as Day 2 operation perspective.

Since long time Java Spring dominated the Java world, Millions of Java developers preferred and developed millions of lines of codes and applications using Pivotal’s Spring framework.

Now Pivotal’s Spring Cloud framework including Spring Boot and other Cloud native application frameworks has taken the cloud-native application development to another height of simplicity, with the Spring Cloud framework many server codes are embedded inside the code, just by writing few lines of code one can implement complex servers which otherwise required the help of a systems engineer or infrastructure engineer to get involved.

Pivotal Cloud Foundry is now the best platform for any cloud-native application which adhered to the 10-factor app principles. Because of the following advantages provided by the platform.

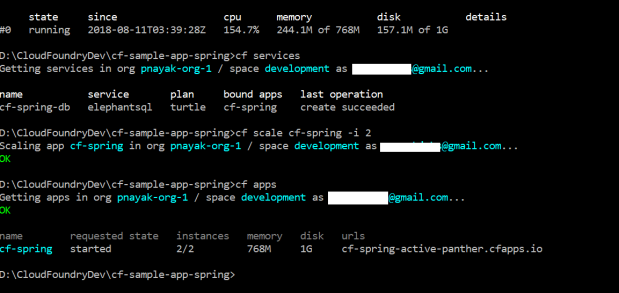

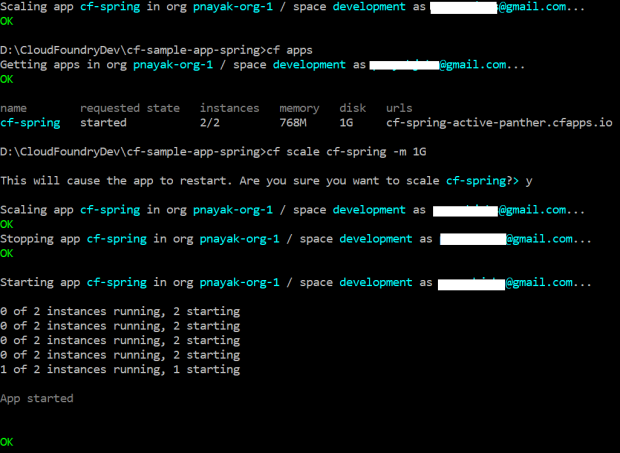

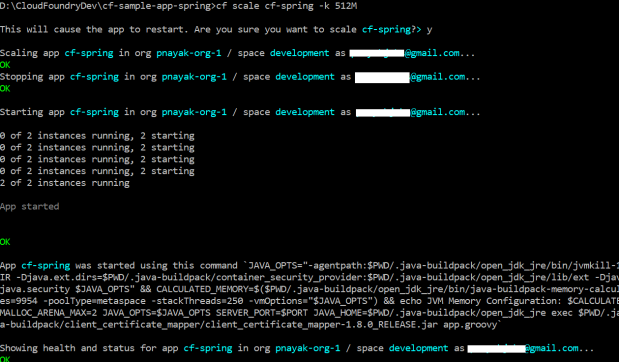

- Developers don’t need to need to know anything related to infrastructure on which their code is going to run.

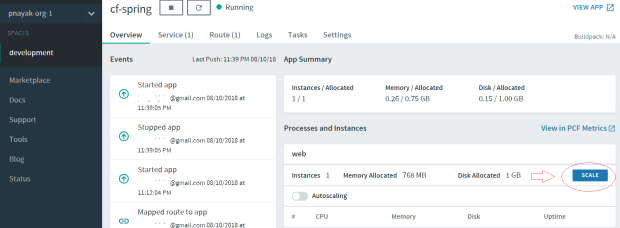

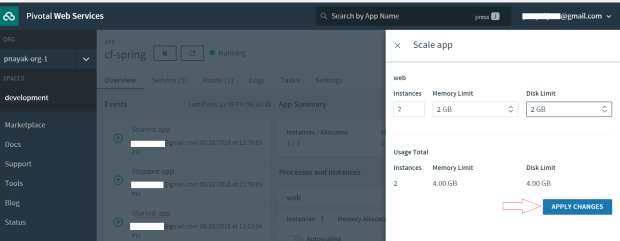

- They don’t need to be bothered how the high availability of the application is going to be implemented.

- They don’t need to bother how the scalability of the application is taken care of.

- Developers can test their code in an environment which is exactly the same as the production environment.

- As a developer don’t need to spend time nor wait for infrastructure implementation nor the day 2 operation, they can be focused on their code development.

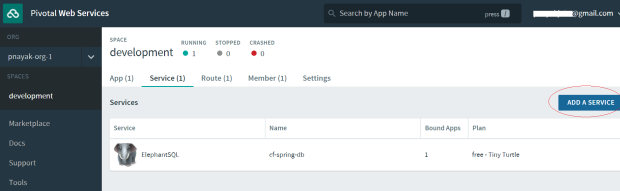

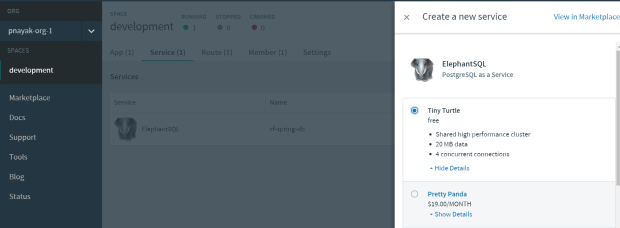

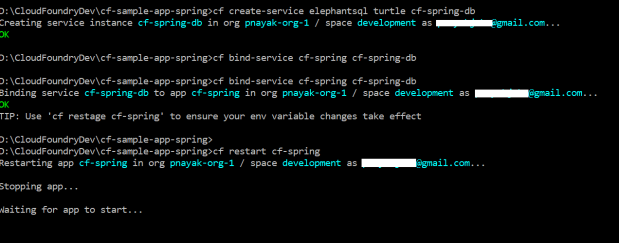

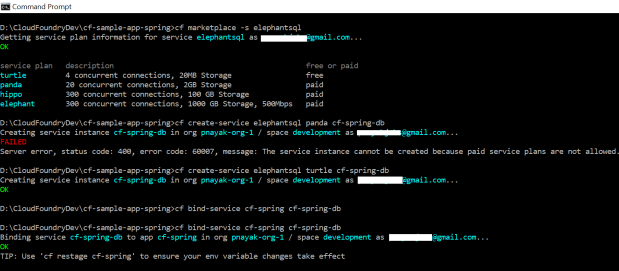

- Other dependent Services can be created and attached to the applications when and where needed a very simplified manner.

- Blue-Green Deployment can be just like any other simple and straightforward process of switching the route of the application.

- Underlying platform and application security are inbuilt to the So, your code is always secure. Security is applied with the 3’R principle, Repair, Repave, Rotate. I’ll discuss application security in another blog. Today I’ll focus on installation and Implementation of Cloud Foundry on AWS cloud.

- Dependency can be just provided as another service and can be bounded attached

- Complex Servers can be implemented using a few lines of Java code and the application can simply run on that server without any external help.

In a nutshell, Developer could say to PCF Platform, Hey, here is my code, just run it and I don’t care How.

And the platform has the intelligence to recognize the code i.e which language it is written, what dependency it has, what kind of OS its needs, so on and so forth.

And it ll provide the application all the required elements and run it in the desired way to give the output to the user. Not only it just runs the code, but it takes care of the High Availability, Scalability and Security requirement of the application without any further intervention from the developer or any other personnel during runtime.

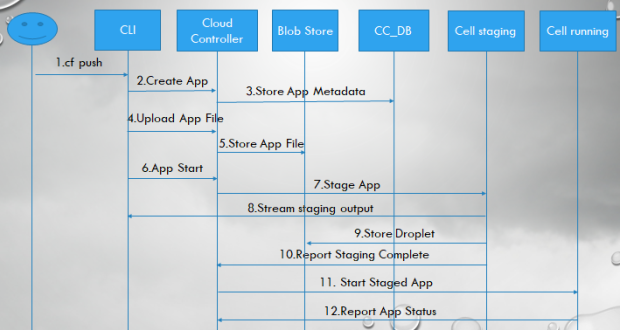

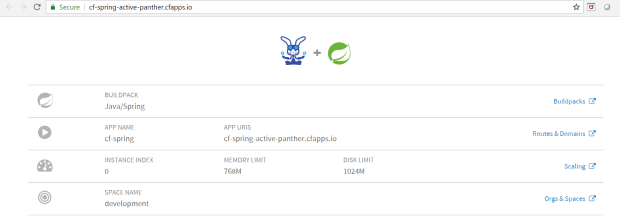

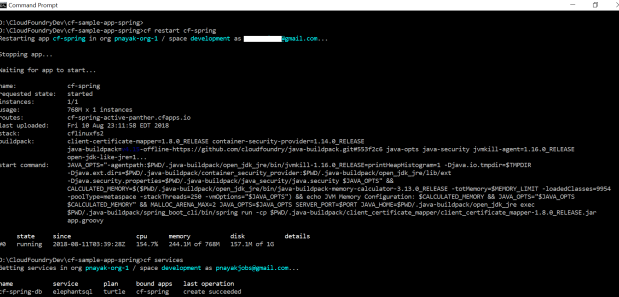

And that is done by a simple command i.e. “cf push”, these 2 magic words can take all the necessary actions required for an application to run in a Cloud Native environment.

Well for organizations to run Cloud Foundry, first they need to install and configure cloud foundry on a Cloud Environment (IaaS). The underlying IaaS can be any Private Cloud like vSphere or OpenStack, it can also be a Public Cloud Environment like Amazon AWS, Microsoft Azure or Google Cloud (GCP).

Well, it is It’s been difficult to install and configure a cloud foundry Instance on an IaaS environment and many different approaches had been applied to simplify the process.

But the latest PCF-Pipeline using Pivotal’s Concourse made it really simple and with this, we can even apply the CI/CD to the Pivotal CF Platform environment and maintain different versions of Cloud Foundry along with CI/CD for applications.

So, I am going to explain this process here. Here I am going to install Pivotal’s Cloud Foundry on AWS using Concourse CI tool.

Step 1. Install AWS CLI

Step 2: Prepare your AWS account

2.1: Create an AWS policy with the following

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": [

"logs:*",

"elasticloadbalancing:*",

"cloudformation:*",

"iam:*",

"kms:*",

"route53:*",

"ec2:*"

],

"Resource": "*"

}

]

}3.2: Create some AWS users with the policy associated

$ aws iam create-user –user-name “bbl-user”

$ aws iam put-user-policy –user-name “bbl-user” –policy-name “bbl-policy” –policy-document “$(pbpaste)”

$ aws iam create-access-key –user-name “bbl-user”

Install bosh bootloader by the package manager

Mac OS X

$ brew tap cloudfoundry/tap

$ brew install bosh-cli

$ brew install bbl

Or you can download the installer from the following place for your OS type.

https://github.com/cloudfoundry/bosh-bootloader/releases

i.e for Linux

$ wget https://github.com/cloudfoundry/bosh-bootloader/releases/download/v6.10.46/bbl-v6.10.46_linux_x86-64

Step 3 : Install BOSH on AWS.

$ mkdir my-concourse-bbl-state ; cd my-concourse-bbl-state

$ export STEMCELL_URL=”https://bosh.io/d/stemcells/bosh-aws-xen-hvm-ubuntu-xenial-go_agent”

$ bbl up –lb-type concourse –aws-access-key-id xxxxxxxx –aws-secret-accesss xxxxxxxxxxxxxxxx –aws-region us-east-1 –iaas aws

here bbl takes a load balancer type argument as concourse, it means bbl knows the BOSH Director is created for creating a concourse environment and it ll use an AWS load balancer to load balance the traffic.

if you try bbl up –help you will find two type of value -lb-type accepts one is concourse and another is cf. Here we ll pass the value as concourse as I ll be installing cloud foundry with the help of concourse.

Go and have a hot coffee and come back this will take some time to finish.

$ eval $(bbl print-env) $ bbl director-ca-cert > bosh.crt $ export BOSH_CA_CERT=bosh.crt $ bosh alias-env bosh-1 $ bosh login

$ bbl lbs

Concourse LB: bbl-env-ti-e8080cb-concourse-lb [bbl-env-ti-e8080cb-concourse-lb-78c2a74d80d38479.elb.us-east-1.amazonaws.com]

or you can get if directly from the AWS console too.

Now if you have your own website domain you can create a hostedzon on AWS route 53 and create a A Recor Set to access the load balancer URL. I ll explain that in another blog.

here is a screenshot of my record set

After this I can access my concourse as https://concourse.lab.pnayak.com

Step 4 : Deploy Pivotal’s open source CI tool Concourse

$ git clone https://github.com/concourse/concourse-bosh-deployment.git

edit the var file ~/concourse-bosh-deployment/cluster/vars.yml according to the bosh environment just you created.for me it looks like the following

$ vi vars.yml external_host: "bbl-env-al-fde418d-concourse-lb-b15c491e218bd82c.elb.us-east-1.amazonaws.com" external_url: "https://bbl-env-al-fde418d-concourse-lb-b15c491e218bd82c.elb.us-east-1.amazonaws.com" local_user: username: "pnayak" password: "Passw0rd" network_name: 'private' web_network_name: 'private' web_vm_type: 'c4.xlarge' web_network_vm_extension: 'lb' db_vm_type: 'c4.xlarge' db_persistent_disk_type: '100GB' worker_vm_type: c4.xlarge deployment_name: 'concourse'

Note – If you have created a rRecord Set please replace the external_host and external_url to your own concourse external URL for me it is “concourse.lab.pnayak.com”.

Now before deploying the Concourse cluster we need to upload the stemcell for the instances.

So execute the following command to upload the stemcell to the bosh environment from https://bosh.io/d/stemcells/bosh-aws-xen-hvm-ubuntu-xenial-go_agent?v=170.9

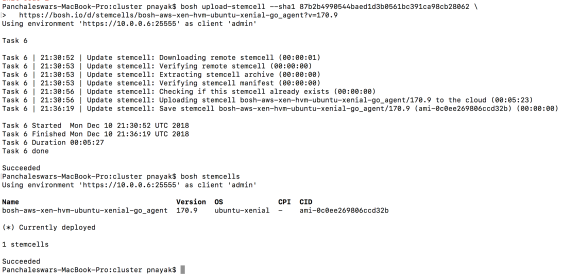

$bosh upload-stemcell --sha1 87b2b4990544baed1d3b0561bc391ca98cb28062 \ https://bosh.io/d/stemcells/bosh-aws-xen-hvm-ubuntu-xenial-go_agent?v=170.9

To achieve that execute the following bosh command

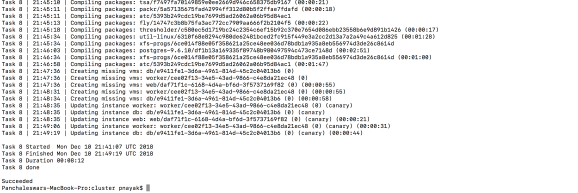

$ bosh deploy -d concourse concourse.yml \ -l ../versions.yml \ -l vars.yml \ -o operations/basic-auth.yml \ -o operations/privileged-http.yml \ -o operations/privileged-https.yml \ -o operations/tls.yml \ -o aws-tls-vars.yml \ -o operations/web-network-extension.yml \ -o worker-ephemeral-disk.yml

Cloud Config View for the concourse instances.

Concourse Environment Creation Succeeded. It Created an web server instance along with the Concourse Worker Instance and the DB instance.

Here is the AWS Console view of the Concourse and other instances along with the BOSH Director.

Now you can login to the concourse web instance as follows.

put http://concourse.lab.pnayak.com on your browser and you ll get a page like this

Login using your login username and password you entered in your vars.yml file.

if you want to login from your terminal you can do so as follows.

Install concourse CLI in your laptop or Desktop

Download concourse CLI from the URL https://concourse-ci.org/download.html

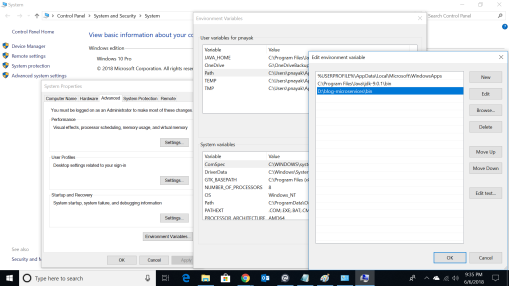

Download the CLI “fly” for your OS and put into your PATH directory.

you can test the concourse CLI named fly by typing $fly -v or $fly –help

Now to login to the target concourse environment type the following command and execute it.

$ fly -t concourse login -c https://concourse.lab.pnayak.com -k (-k should be used if you want to ignore the SSL certificate)

Now copy the output of the command and paste it on the browser to login with your username and password, once successful you can go back to your terminal and see you have successfully logged-in to concourse using fly.

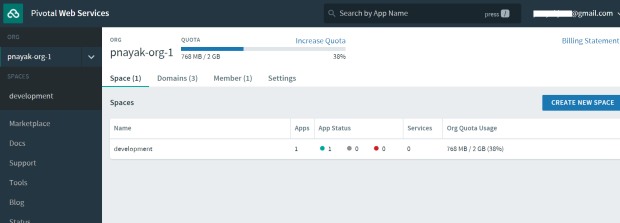

I ll cover the next section of the Cloud Foundry Installation and automation using Concourse in my Next blog please check here.

Automating Installation of Pivotal Cloud Foundry on AWS-2